Web3D using software rendering

Nowadays, most people who want to build a Web3D demo use WebGL.

WebGL has become the de facto standard for Web3D, because Chrome, Firefox, Safari, IE, and Chrome for Android all support it. iOS 8 will be launched this year, and WebGL will also run in Safari on iOS 8.

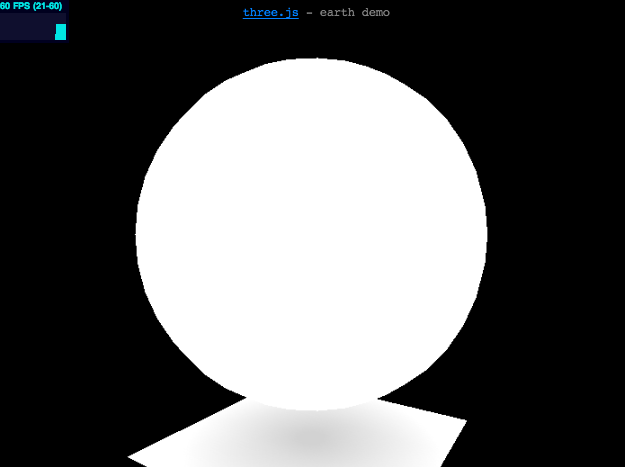

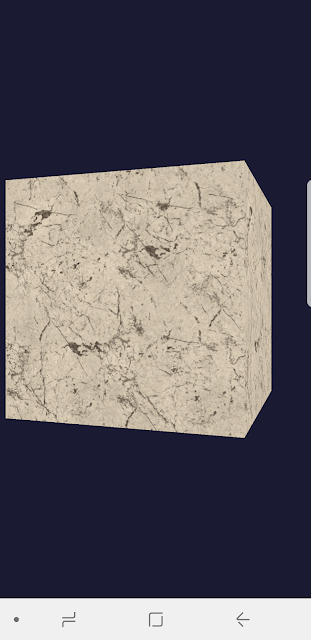

To support Web3D on iOS 7 and earlier versions, I studied the software renderer in three.js and helped add texture mapping and per-pixel lighting to it.

First, we get the image data from the canvas.

var context = canvas.getContext( '2d', {

alpha: parameters.alpha === true

} );

imagedata = context.getImageData( 0, 0, canvasWidth, canvasHeight );

data = imagedata.data;
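To make the layout of that data array concrete, here is a minimal sketch. It assumes the standard ImageData layout (four bytes per pixel in R, G, B, A order, stored row by row). The helper `setPixel` and the stand-in `Uint8ClampedArray` are hypothetical, used only so the snippet runs without a canvas.

```javascript
// Stand-in for imagedata.data: 4 bytes (R, G, B, A) per pixel, row by row.
var canvasWidth = 4, canvasHeight = 2;
var data = new Uint8ClampedArray( canvasWidth * canvasHeight * 4 );

// Hypothetical helper: write one opaque pixel at (x, y) and return its byte offset.
function setPixel( data, width, x, y, r, g, b ) {
  var offset = ( y * width + x ) * 4;
  data[ offset ] = r;
  data[ offset + 1 ] = g;
  data[ offset + 2 ] = b;
  data[ offset + 3 ] = 255; // fully opaque
  return offset;
}

var offset = setPixel( data, canvasWidth, 3, 1, 255, 128, 0 );
```

The renderer writes into this array in the same way and only hands it back to the canvas once per frame.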

Second, we project the faces of the objects into screen space. We don't need to sort them with the painter's algorithm, because three.js implements a screen-sized z-buffer that stores the depth values for depth testing.

Then we interpolate the attributes across the pixels of these faces.

var dz12 = z1 - z2, dz31 = z3 - z1;

var invDet = 1.0 / (dx12*dy31 - dx31*dy12);

var dzdx = (invDet * (dz12*dy31 - dz31*dy12)); // dz per one subpixel step in x

var dzdy = (invDet * (dz12*dx31 - dx12*dz31)); // dz per one subpixel step in y
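As a quick check of these formulas, the following sketch computes the gradients of one attribute over a small triangle and evaluates the resulting plane at the other two vertices. The helper `gradients` is hypothetical. The delta conventions (`dx12 = x1 - x2`, `dx31 = x3 - x1`, `dy12 = y2 - y1`, `dy31 = y1 - y3`) are an assumption, chosen because they make the formulas above consistent. The fixed-point subpixel scaling is left out.

```javascript
// Hypothetical helper: gradients of an attribute v over a triangle.
// Assumed delta conventions (not shown in the snippet above):
//   dx12 = x1 - x2, dx31 = x3 - x1, dy12 = y2 - y1, dy31 = y1 - y3
function gradients( x1, y1, v1, x2, y2, v2, x3, y3, v3 ) {
  var dx12 = x1 - x2, dx31 = x3 - x1;
  var dy12 = y2 - y1, dy31 = y1 - y3;
  var dv12 = v1 - v2, dv31 = v3 - v1;
  var invDet = 1.0 / ( dx12 * dy31 - dx31 * dy12 );
  return {
    dvdx: invDet * ( dv12 * dy31 - dv31 * dy12 ), // change of v per step in x
    dvdy: invDet * ( dv12 * dx31 - dx12 * dv31 )  // change of v per step in y
  };
}

// Triangle (0,0), (4,0), (0,2) with depths 1, 5, 3.
var g = gradients( 0, 0, 1, 4, 0, 5, 0, 2, 3 );

// Evaluate the plane through vertex 1 at the second and third vertices.
var zAtV2 = 1 + ( 4 - 0 ) * g.dvdx + ( 0 - 0 ) * g.dvdy;
var zAtV3 = 1 + ( 0 - 0 ) * g.dvdx + ( 2 - 0 ) * g.dvdy;
```

Both evaluations reproduce the depths given for those vertices, which is what lets the rasterizer derive every pixel's value from one vertex plus two gradients.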

We interpolate the texture coordinates and the vertex normal in the same way.

var dtu12 = tu1 - tu2, dtu31 = tu3 - tu1;

var dtudx = (invDet * (dtu12*dy31 - dtu31*dy12)); // dtu per one subpixel step in x

var dtudy = (invDet * (dtu12*dx31 - dx12*dtu31)); // dtu per one subpixel step in y

var dtv12 = tv1 - tv2, dtv31 = tv3 - tv1;

var dtvdx = (invDet * (dtv12*dy31 - dtv31*dy12)); // dtv per one subpixel step in x

var dtvdy = (invDet * (dtv12*dx31 - dx12*dtv31)); // dtv per one subpixel step in y

var dnz12 = nz1 - nz2, dnz31 = nz3 - nz1;

var dnzdx = (invDet * (dnz12*dy31 - dnz31*dy12)); // dnz per one subpixel step in x

var dnzdy = (invDet * (dnz12*dx31 - dx12*dnz31)); // dnz per one subpixel step in y

Next, compute the interpolated values at the top-left corner of the face's bounding area.

var cz = ( z1 + ((minXfixscale) - x1) * dzdx + ((minYfixscale) - y1) * dzdy ) | 0; // z left/top corner

var ctu = ( tu1 + (minXfixscale - x1) * dtudx + (minYfixscale - y1) * dtudy ); // u left/top corner

var ctv = ( tv1 + (minXfixscale - x1) * dtvdx + (minYfixscale - y1) * dtvdy ); // v left/top corner

var cnz = ( nz1 + (minXfixscale - x1) * dnzdx + (minYfixscale - y1) * dnzdy ); // normal left/top corner
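The point of starting from the corner value is that every other pixel can then be reached with additions only. The sketch below illustrates this for one attribute. All numbers are made up, plain pixel coordinates stand in for the fixed-point ones, and the loop is a simplification, not the renderer's actual loop.

```javascript
// Assumed inputs: attribute value z1 at vertex (x1, y1) and its gradients.
var x1 = 2, y1 = 1, z1 = 10;
var dzdx = 0.5, dzdy = 2;
var minx = 0, miny = 0, width = 4, height = 3;

// Value at the top-left corner of the bounding area.
var cz = z1 + ( minx - x1 ) * dzdx + ( miny - y1 ) * dzdy;

// Walk the area with additions only: one dzdy step per row, one dzdx step per pixel.
var rows = [];
var rowStart = cz;
for ( var y = 0; y < height; y ++ ) {
  var z = rowStart;
  var row = [];
  for ( var x = 0; x < width; x ++ ) {
    row.push( z );
    z += dzdx;
  }
  rows.push( row );
  rowStart += dzdy;
}
```

The entry `rows[ 1 ][ 2 ]` corresponds to the vertex position (2, 1), so it should come out equal to `z1`.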

Divide the screen into blocks of 8x8 pixels, and draw the pixels block by block.

for ( var y0 = miny; y0 < maxy; y0 += q ) {

while ( x0 >= minx && x0 < maxx && cb1 >= nmax1 && cb2 >= nmax2 && cb3 >= nmax3 ) {

// Each block is 8x8 pixels, so we scan it as 8 rows of 8 pixels

for ( var iy = 0; iy < q; iy ++ ) {

for ( var ix = 0; ix < q; ix ++ ) {

if ( z < zbuffer[ offset ] ) { // depth test

// if it passes, write the depth to the z-buffer and draw the pixel

zbuffer[ offset ] = z;

shader( data, offset * 4, cxtu, cxtv, cxnz, face, material );

}

}

}

}

}
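The depth test at the heart of that loop can be tried in isolation. This is a minimal sketch under stated assumptions: smaller z means closer to the camera, the buffer is cleared to a "far" value `maxZVal`, and `drawFragment` is a hypothetical helper standing in for the shader call.

```javascript
var width = 4, height = 1;
var maxZVal = 0xffffff; // assumed "far" depth; smaller z means closer
var zbuffer = new Int32Array( width * height ).fill( maxZVal );
var data = new Uint8ClampedArray( width * height * 4 );

// Hypothetical helper: draw one fragment only if it passes the depth test.
function drawFragment( offset, z, r, g, b ) {
  if ( z < zbuffer[ offset ] ) {
    zbuffer[ offset ] = z;       // remember the new nearest depth
    data[ offset * 4 ] = r;
    data[ offset * 4 + 1 ] = g;
    data[ offset * 4 + 2 ] = b;
    data[ offset * 4 + 3 ] = 255;
    return true;
  }
  return false;
}

var first = drawFragment( 1, 500, 255, 0, 0 );  // red, drawn onto the empty buffer
var second = drawFragment( 1, 100, 0, 255, 0 ); // green, closer, so it overwrites red
var third = drawFragment( 1, 300, 0, 0, 255 );  // blue, behind green, so it is rejected
```

Because the test is done per pixel, the faces can be submitted in any order and the nearest one still wins.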

Finally, put the image data back onto the canvas.

context.putImageData( imagedata, 0, 0, x, y, width, height );

To support texture mapping, we have to store the texels in a texel buffer, like this:

var data;

try {

var ctx = canvas.getContext('2d');

if(!isCanvasClean) ctx.clearRect(0, 0, dim, dim); // clear leftovers from a previous texture

ctx.drawImage(image, 0, 0, dim, dim);

var imgData = ctx.getImageData(0, 0, dim, dim);

data = imgData.data;

}

catch(e) {

return;

}

var size = data.length;

this.data = new Uint8Array(size);

var alpha;

for(var i=0, j=0; i < size; ) {

this.data[i++] = data[j++];

this.data[i++] = data[j++];

this.data[i++] = data[j++];

alpha = data[j++];

this.data[i++] = alpha;

if(alpha < 255)

this.hasTransparency = true;

}

Then we compute the pixel color from the texels:

var tdim = material.texture.width;

var isTransparent = material.transparent;

var tbound = tdim - 1;

var tdata = material.texture.data;

var texel = tdata[((v * tdim) & tbound) * tdim + ((u * tdim) & tbound)];

if ( !isTransparent ) {

buffer[ offset ] = (texel & 0xff0000) >> 16;

buffer[ offset + 1 ] = (texel & 0xff00) >> 8;

buffer[ offset + 2 ] = texel & 0xff;

buffer[ offset + 3 ] = material.opacity * 255;

}

else {

var opaci = ((texel >> 24) & 0xff) * material.opacity; // alpha in the range 0..255

// Unpack the texel so that each channel can be blended on its own

var r = (texel & 0xff0000) >> 16;

var g = (texel & 0xff00) >> 8;

var b = texel & 0xff;

if(opaci < 250) {

var a = opaci / 255; // blend weight in the range 0..1

r = r * a + buffer[ offset ] * (1 - a);

g = g * a + buffer[ offset + 1 ] * (1 - a);

b = b * a + buffer[ offset + 2 ] * (1 - a);

}

buffer[ offset ] = r;

buffer[ offset + 1 ] = g;

buffer[ offset + 2 ] = b;

buffer[ offset + 3 ] = material.opacity * 255;

}
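The texel lookup above relies on `& tbound` to wrap the coordinates, which only works when the texture size is a power of two (so that `tdim - 1` is a mask of all ones). The sketch below isolates that index computation. `texelIndex` is a hypothetical helper, and a square texture is assumed.

```javascript
// Hypothetical helper: index of the texel at (u, v) in a square texture
// whose side length tdim is a power of two.
function texelIndex( u, v, tdim ) {
  var tbound = tdim - 1; // e.g. 4 - 1 = 3 = binary 11, a wrap-around mask
  return ( ( v * tdim ) & tbound ) * tdim + ( ( u * tdim ) & tbound );
}

var inside = texelIndex( 0.5, 0.25, 4 );   // row 1, column 2
var wrapped = texelIndex( 1.5, 1.25, 4 );  // one full repeat further, same texel
var negative = texelIndex( -0.25, 0, 4 );  // wraps to the last column of row 0
```

The bitwise AND also truncates the coordinates to integers, so no separate `Math.floor` call is needed.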

To support lighting, we first store the lighting colors in a palette:

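var diffuseR = material.ambient.r + material.color.r * 255;

var diffuseG = material.ambient.g + material.color.g * 255;

var diffuseB = material.ambient.b + material.color.b * 255;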

if ( bSimulateSpecular ) {

var i = 0, j = 0;

while(i < 204) {

var r = i * diffuseR / 204;

var g = i * diffuseG / 204;

var b = i * diffuseB / 204;

if(r > 255)

r = 255;

if(g > 255)

g = 255;

if(b > 255)

b = 255;

palette[j++] = r;

palette[j++] = g;

palette[j++] = b;

++i;

}

while(i < 256) { // plus specular highlight

var r = diffuseR + (i - 204) * (255 - diffuseR) / 82;

var g = diffuseG + (i - 204) * (255 - diffuseG) / 82;

var b = diffuseB + (i - 204) * (255 - diffuseB) / 82;

if(r > 255)

r = 255;

if(g > 255)

g = 255;

if(b > 255)

b = 255;

palette[j++] = r;

palette[j++] = g;

palette[j++] = b;

++i;

}

} else {

var i = 0, j = 0;

while(i < 256) {

var r = i * diffuseR / 255;

var g = i * diffuseG / 255;

var b = i * diffuseB / 255;

if(r > 255)

r = 255;

if(g > 255)

g = 255;

if(b > 255)

b = 255;

palette[j++] = r;

palette[j++] = g;

palette[j++] = b;

++i;

}

}

At run time, we fetch the lighting color according to the pixel normal:

var tdim = material.texture.width;

var isTransparent = material.transparent;

var cIndex = (n > 0 ? (~~n) : 0) * 3;

var tbound = tdim - 1;

var tdata = material.texture.data;

var tIndex = (((v * tdim) & tbound) * tdim + ((u * tdim) & tbound)) * 4;

if ( !isTransparent ) {

buffer[ offset ] = (material.palette[cIndex] * tdata[tIndex]) >> 8;

buffer[ offset + 1 ] = (material.palette[cIndex+1] * tdata[tIndex+1]) >> 8;

buffer[ offset + 2 ] = (material.palette[cIndex+2] * tdata[tIndex+2]) >> 8;

buffer[ offset + 3 ] = material.opacity * 255;

} else {

var opaci = tdata[tIndex+3] * material.opacity; // alpha in the range 0..255

// Light each channel the same way as in the opaque branch

var r = (material.palette[cIndex] * tdata[tIndex]) >> 8;

var g = (material.palette[cIndex+1] * tdata[tIndex+1]) >> 8;

var b = (material.palette[cIndex+2] * tdata[tIndex+2]) >> 8;

if(opaci < 250) {

var a = opaci / 255; // blend weight in the range 0..1

r = r * a + buffer[ offset ] * (1 - a);

g = g * a + buffer[ offset + 1 ] * (1 - a);

b = b * a + buffer[ offset + 2 ] * (1 - a);

}

buffer[ offset ] = r;

buffer[ offset + 1 ] = g;

buffer[ offset + 2 ] = b;

buffer[ offset + 3 ] = material.opacity * 255;

}
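To see the palette lookup and the `>> 8` modulation working together, here is a small self-contained sketch. It rebuilds only the non-specular palette. It assumes the palette is a `Uint8Array` with three bytes per entry and that the interpolated normal value `n` lies in the range 0..255. `buildPalette` and `shadeChannel` are hypothetical helpers.

```javascript
// Build a 256-entry diffuse palette, 3 bytes (R, G, B) per entry.
function buildPalette( diffuseR, diffuseG, diffuseB ) {
  var palette = new Uint8Array( 256 * 3 ); // fractional values are truncated on store
  for ( var i = 0, j = 0; i < 256; i ++ ) {
    palette[ j ++ ] = Math.min( 255, i * diffuseR / 255 );
    palette[ j ++ ] = Math.min( 255, i * diffuseG / 255 );
    palette[ j ++ ] = Math.min( 255, i * diffuseB / 255 );
  }
  return palette;
}

// Hypothetical helper: modulate one texel channel (0 = R, 1 = G, 2 = B)
// by the palette entry selected by the normal value n.
function shadeChannel( palette, n, channel, texelValue ) {
  var cIndex = ( n > 0 ? ( ~~ n ) : 0 ) * 3; // negative normals clamp to entry 0
  return ( palette[ cIndex + channel ] * texelValue ) >> 8;
}

var palette = buildPalette( 255, 128, 0 );
var lit = shadeChannel( palette, 255, 0, 200 );    // fully lit red channel
var dark = shadeChannel( palette, -10, 0, 200 );   // facing away: black
var half = shadeChannel( palette, 127.9, 1, 255 ); // about half-lit green channel
```

Note that `>> 8` divides by 256 rather than 255, so a fully lit texel value of 200 comes out as 199. That small loss is the price of replacing a division with a shift.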

Optimization: a blockFlags array tracks which blocks have to be cleared, and a blockMaxZ array records the maximum depth stored in each block. If depth > blockMaxZ[blockId], the block does not need to be drawn.
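The early rejection with blockMaxZ could look roughly like the sketch below. It is an illustration of the idea, not the renderer's actual code. It assumes that smaller z means closer, that depths are non-negative, and that the block's maximum is recomputed by scanning the z-buffer. `updateBlockMaxZ` and `canSkipBlock` are hypothetical helpers, and the blockFlags bookkeeping is left out.

```javascript
var q = 8;                  // block size in pixels, as in the text
var width = 16, height = 8; // assumed tiny screen: 2 x 1 blocks
var blocksX = width / q;
var maxZVal = 0xffffff;     // assumed "far" depth
var zbuffer = new Int32Array( width * height ).fill( maxZVal );
var blockMaxZ = new Int32Array( blocksX * ( height / q ) ).fill( maxZVal );

// Recompute the largest (farthest) depth currently stored in one block.
function updateBlockMaxZ( bx, by ) {
  var maxz = 0;
  for ( var y = by * q; y < ( by + 1 ) * q; y ++ ) {
    for ( var x = bx * q; x < ( bx + 1 ) * q; x ++ ) {
      maxz = Math.max( maxz, zbuffer[ y * width + x ] );
    }
  }
  blockMaxZ[ by * blocksX + bx ] = maxz;
}

// If the nearest depth of a face inside a block is still behind everything
// already drawn there, no pixel of it can pass the depth test.
function canSkipBlock( bx, by, minDepthOfFace ) {
  return minDepthOfFace > blockMaxZ[ by * blocksX + bx ];
}

// Fill block 0 completely with depth 100 and leave block 1 untouched.
for ( var y = 0; y < q; y ++ ) {
  for ( var x = 0; x < q; x ++ ) {
    zbuffer[ y * width + x ] = 100;
  }
}
updateBlockMaxZ( 0, 0 );

var skipBehind = canSkipBlock( 0, 0, 500 );  // behind the filled block
var drawInFront = canSkipBlock( 0, 0, 50 );  // in front of it
var drawEmpty = canSkipBlock( 1, 0, 500 );   // block 1 is still empty
```

One comparison per block replaces up to 64 per-pixel depth tests whenever a face is fully hidden in that block.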
