AR on the Web

Since the arrival of Pokémon Go, many people have started discussing the possibility of AR (augmented reality) on the Web. Jerome Etienne's slides gave me some ideas for building this AR demo.

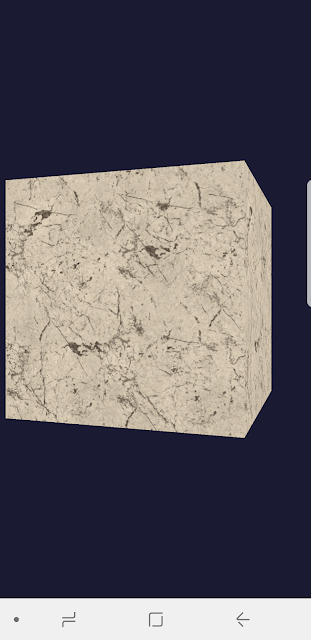

First of all, the demo is based on three.js and js-aruco. three.js is a WebGL framework that helps us construct and load 3D models. js-aruco is a JavaScript port of ArUco, a minimal library for augmented reality applications based on OpenCV. Together, these two projects make it possible to implement a Web AR proof of concept.

Next, I would like to explain how the demo is implemented. First, we use navigator.getUserMedia to get the video stream from the webcam. This function is not supported by every browser, so please check its current support status.

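```javascript
// Pick whichever (possibly vendor-prefixed) implementation this browser exposes.
navigator.getUserMedia = (navigator.getUserMedia ||
                          navigator.webkitGetUserMedia ||
                          navigator.mozGetUserMedia ||
                          navigator.msGetUserMedia);

if (navigator.getUserMedia) {
  // Request video only; gotStream and noStream are the success and error callbacks.
  navigator.getUserMedia({ 'video': true }, gotStream, noStream);
}
```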

The code above shows how to get a media stream in JavaScript. This demo only needs video, which is passed to the gotStream callback. In gotStream, I hand the stream to the video element that is displayed on screen, and then call setupAR(). In setupAR(), I initialize the AR module and set up the model and the scene scale. After that, I only need to wait for each new video frame and get the detection result from js-aruco in updateVideoStream().
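As an aside, newer browsers also expose a promise-based navigator.mediaDevices.getUserMedia next to the callback form used above. The sketch below is not the demo's code: `requestCamera` is a hypothetical helper, it assumes the video element supports `srcObject`, and it takes the navigator and the video element as parameters so that the fallback logic can be followed outside a browser.

```javascript
// Hypothetical helper, not part of the demo: request a video-only stream
// and attach it to a <video> element. Returns a promise for the stream.
function requestCamera(nav, video) {
  const constraints = { video: true };

  // Promise-based API, where the browser provides it.
  if (nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === 'function') {
    return nav.mediaDevices.getUserMedia(constraints).then(function (stream) {
      video.srcObject = stream;
      return stream;
    });
  }

  // Otherwise fall back to the callback form, including vendor prefixes.
  const legacy = nav.getUserMedia || nav.webkitGetUserMedia ||
                 nav.mozGetUserMedia || nav.msGetUserMedia;
  if (!legacy) {
    return Promise.reject(new Error('getUserMedia is not available'));
  }
  return new Promise(function (resolve, reject) {
    legacy.call(nav, constraints, function (stream) {
      video.srcObject = stream;
      resolve(stream);
    }, reject);
  });
}
```

In a page this could be called as `requestCamera(navigator, document.querySelector('video'))`, with setupAR() run once the promise resolves.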

In updateVideoStream(), as in the picture above, the current video frame is drawn to a Canvas2D, which gives us an imageData. The imageData is then sent to arDetector to check whether it contains any markers. The detector returns an array of the markers found in this imageData. Each marker carries the (x, y) coordinates of its corners, which can be used in many ways. In my demo, I draw the corners and the marker id on top of the frame. The most interesting part is that we can use the markers to update the pose of a 3D model.
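The per-frame step described above can be sketched roughly as follows. This is an illustration under assumptions rather than the demo's actual updateVideoStream(): `detectMarkers` and `drawMarkers` are hypothetical names, `context` is assumed to be a Canvas2D context, and `detector` is assumed to be a js-aruco `AR.Detector` whose `detect(imageData)` returns markers with an `id` and a `corners` array of `{x, y}` points.

```javascript
// Hypothetical helper: grab one frame and return the markers found in it.
function detectMarkers(video, context, detector, width, height) {
  // Copy the current video frame onto the canvas, then read the pixels back.
  context.drawImage(video, 0, 0, width, height);
  const imageData = context.getImageData(0, 0, width, height);
  return detector.detect(imageData);
}

// Hypothetical helper: outline each marker and label it with its id.
function drawMarkers(context, markers) {
  markers.forEach(function (marker) {
    const corners = marker.corners;
    context.beginPath();
    context.moveTo(corners[0].x, corners[0].y);
    for (let i = 1; i < corners.length; i++) {
      context.lineTo(corners[i].x, corners[i].y);
    }
    context.closePath();
    context.stroke();
    // Put the id next to the first corner.
    context.fillText(String(marker.id), corners[0].x, corners[0].y);
  });
}
```

Both helpers take their dependencies as arguments, so they could be called once per animation frame with the page's video element, canvas context and detector.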

POS.Posit helps us compute the pose from the corners. A pose contains a rotation matrix and a translation vector in 3D space. This makes it easy to show a 3D model on a marker, apart from some coordinate conversion. First, keep in mind that the video stream is in 2D space, so the corners have to be converted before they can be used in 3D space.
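A minimal sketch of that conversion, as I understand the usual js-aruco pattern: move the origin from the top-left of the canvas to its centre and flip the y axis so that it points up. `centerCorners` is a hypothetical helper, and the `POS.Posit` usage in the trailing comment (including `modelSize`, the marker's physical side length) is an assumption about how the demo wires things together.

```javascript
// Hypothetical helper: convert canvas pixel coordinates (origin top-left,
// y pointing down) into centred coordinates (origin at the canvas centre,
// y pointing up). Returns new objects and leaves the input untouched.
function centerCorners(corners, width, height) {
  return corners.map(function (corner) {
    return {
      x: corner.x - width / 2,
      y: height / 2 - corner.y
    };
  });
}

// In the browser, with js-aruco's posit script loaded (assumed usage):
//   const posit = new POS.Posit(modelSize, canvas.width);
//   const pose = posit.pose(centerCorners(marker.corners, canvas.width, canvas.height));
//   pose.bestRotation is a 3x3 array, pose.bestTranslation a 3-element array.
```

For a 640 by 480 canvas, the top-left pixel (0, 0) becomes (-320, 240) and the centre pixel (320, 240) becomes (0, 0).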

Next, we need to convert this rotation matrix into the rotation angles of the 3D model.

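```javascript
// Extract the model's rotation angles from the 3x3 rotation matrix of the pose.
// Note: three.js rotations are in radians; the 90 offset is kept exactly as published.
dae.rotation.x = -Math.asin(-rotation[1][2]);
dae.rotation.y = -Math.atan2(rotation[0][2], rotation[2][2]) - 90;
dae.rotation.z = Math.atan2(rotation[1][0], rotation[1][1]);
```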

Finally, set the position of the 3D model.

```javascript
// Place the model using the translation vector of the pose.
dae.position.x = translation[0];
dae.position.y = translation[1];
dae.position.z = -translation[2] * offsetScale;
```
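To make the rotation and position steps easier to check by hand, here is a small sketch that applies them to a plain object instead of a real three.js model. `applyPose` is a hypothetical helper, the extra offset on the y rotation is deliberately left out, and the numbers in the worked check are made up for illustration.

```javascript
// Hypothetical helper mirroring the assignments above. `model` is any object
// with three.js-style `rotation` and `position` fields; `rotation` is the 3x3
// matrix and `translation` the 3-element vector taken from the pose.
function applyPose(model, rotation, translation, offsetScale) {
  // Rotation angles in radians. The demo's extra offset on y is omitted here.
  model.rotation.x = -Math.asin(-rotation[1][2]);
  model.rotation.y = -Math.atan2(rotation[0][2], rotation[2][2]);
  model.rotation.z = Math.atan2(rotation[1][0], rotation[1][1]);

  // Negate z and scale it by offsetScale, as in the demo.
  model.position.x = translation[0];
  model.position.y = translation[1];
  model.position.z = -translation[2] * offsetScale;
  return model;
}

// Worked check: a 30 degree turn about the z axis.
const angle = Math.PI / 6;
const rotationZ = [
  [Math.cos(angle), -Math.sin(angle), 0],
  [Math.sin(angle),  Math.cos(angle), 0],
  [0, 0, 1]
];
const model = { rotation: { x: 0, y: 0, z: 0 }, position: { x: 0, y: 0, z: 0 } };
applyPose(model, rotationZ, [10, 20, 300], 0.5);
console.log(model.rotation.z, model.position.z);
```

With this input, `model.rotation.z` comes out at approximately `Math.PI / 6` and `model.position.z` is -150, while the x and y rotations stay at zero.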

Demo video: https://www.youtube.com/watch?v=68O5w1oIURM

Demo link: http://daoshengmu.github.io/ConsoleGameOnWeb/webar.html (Best for Firefox)
