How to train custom objects in YOLOv2

This article is based on [1]. We want a way to train YOLOv2 on the object classes we are interested in. Darknet has a Windows port maintained by AlexeyAB [2]. First of all, we need to build darknet.exe from the AlexeyAB repository so that we can train and test. Go to build/darknet, open darknet.sln with VS 2015, and set the solution platform to x64. Rebuild the solution; it should succeed and generate darknet.exe.

Then, we need to label the objects in the images that will be used as training data. I use the BBox label tool [3] to mark the objects' coordinates in the images. Launch it with python ./main.py. The tool's image root folder is ./Images; we can create a sub-folder (002) and enter 002 in the tool so that it loads all *.jpg files from there. We then draw a bounding box around each object to mark where it is. The outputs are image-space (pixel) coordinates, stored in ./Labels/002.

However, this coordinate format differs from what YOLOv2 expects: YOLOv2 needs coordinates relative to the image dimensions. The BBox label tool output is

[obj number]

[bounding box left X] [bounding box top Y] [bounding box right X] [bounding box bottom Y]

and YOLOv2 wants

[category number] [object center in X] [object center in Y] [object width in X] [object height in Y]
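The mapping between the two formats can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the script from [4]; the function names and the example box and image size are assumptions made up for this sketch.

```python
def bbox_to_yolo(box, image_width, image_height, class_id=0):
    """Convert a pixel box (left, top, right, bottom) to YOLO-style values.

    The result is (class_id, x_center, y_center, width, height), with the
    four coordinates divided by the image size so they fall in [0, 1].
    """
    left, top, right, bottom = box
    x_center = (left + right) / 2.0 / image_width
    y_center = (top + bottom) / 2.0 / image_height
    width = (right - left) / float(image_width)
    height = (bottom - top) / float(image_height)
    return class_id, x_center, y_center, width, height


def format_yolo_line(values):
    """Format the converted values as one line of a YOLO label file."""
    class_id = values[0]
    coords = values[1:]
    return " ".join([str(class_id)] + ["%.6f" % c for c in coords])


# Hypothetical example: a 200x200 pixel box inside a 400x500 image.
example_line = format_yolo_line(bbox_to_yolo((100, 50, 300, 250), 400, 500))
print(example_line)
```

Each bounding box of an image becomes one such line in the *.txt file that carries the same base name as the image.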

Therefore, we need a converter to do this conversion. We can take the script at [4] and change lines 34 and 35 to set the input and output paths. Then run python ./convert.py. Afterwards, move the output *.txt files and the *.jpg files into the same folder. Next, we edit train.txt and test.txt to list which images form the training set and which serve as the test set.

In train.txt

data/002/images.jpg

data/002/images1.jpg

data/002/images2.jpg

data/002/images3.jpg

In test.txt

data/002/large.jpg

data/002/maxresdefault1.jpg

data/002/testimage2.jpg
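For a larger image set, writing these two lists by hand gets tedious. A minimal sketch of one way to generate them follows, assuming a random split is acceptable; the helper names, the 20% test fraction, and the fixed seed are assumptions for illustration, not part of the original workflow.

```python
import random


def split_image_list(image_paths, test_fraction=0.2, seed=0):
    """Shuffle the paths reproducibly and split them into (train, test)."""
    paths = sorted(image_paths)
    random.Random(seed).shuffle(paths)
    n_test = int(len(paths) * test_fraction)
    if paths and n_test == 0:
        n_test = 1  # keep at least one image for testing
    return paths[n_test:], paths[:n_test]


def write_list(list_path, image_paths):
    """Write one image path per line, as in train.txt and test.txt."""
    with open(list_path, "w") as f:
        for p in image_paths:
            f.write(p + "\n")
```

One could collect the paths with glob.glob("data/002/*.jpg"), pass them to split_image_list, and call write_list once for train.txt and once for test.txt. On Windows, glob returns paths with backslashes, so you may want to replace them with forward slashes to match the entries shown above.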

Then, create the YOLOv2 configuration files. In cfg/obj.data, define the number of classes and where the training and test lists are.

classes= 1

train = train.txt

valid = test.txt

names = cfg/obj.names

backup = backup/

In cfg/obj.names, add the label names of the training classes, one per line, for example

Subaru

For the final file, duplicate yolo-voc.cfg as yolo-obj.cfg. Set batch=64 so that 64 images are used for every training step. Set subdivisions=1; the batch is split into this many parts, so a larger value lowers the GPU VRAM requirement. Set classes=1, the number of categories we want to detect. In line 237, set filters=(classes + 5)*5, which in our case gives filters=30.
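The filters value is easy to get wrong when the number of classes changes, so here is a small sketch of the formula. The function name is hypothetical; the factor of 5 is the number of anchor boxes in the stock yolo-voc.cfg, and each anchor predicts 4 box coordinates, 1 objectness score, and one score per class.

```python
def region_filters(num_classes, num_anchors=5):
    """Filters needed in the last convolutional layer before the region layer.

    Implements filters = (classes + 5) * 5 from the text, with the trailing 5
    exposed as num_anchors (an assumption that the cfg keeps 5 anchors).
    """
    return (num_classes + 5) * num_anchors


print(region_filters(1))   # the single-class case used in this article
```

If the anchor count in the cfg is changed, the multiplier has to change with it.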

Training

YOLOv2 requires a set of convolutional weights to start training from. Darknet provides a set that was pre-trained on ImageNet. This conv.23 file (76 MB) can be downloaded from the official YOLOv2 website.

To start training, type darknet.exe detector train cfg/obj.data cfg/yolo-obj.cfg darknet19_448.conv.23 in a terminal.

Testing

After training, the trained weights are saved in the backup folder. To verify the result, type darknet.exe detector test cfg/obj.data cfg/yolo-obj.cfg backup\yolo-obj_2000.weights data/testimage.jpg.

[1] https://timebutt.github.io/static/how-to-train-yolov2-to-detect-custom-objects/

[2] https://github.com/AlexeyAB/darknet

[3] https://github.com/puzzledqs/BBox-Label-Tool

[4] https://github.com/Guanghan/darknet/blob/master/scripts/convert.py

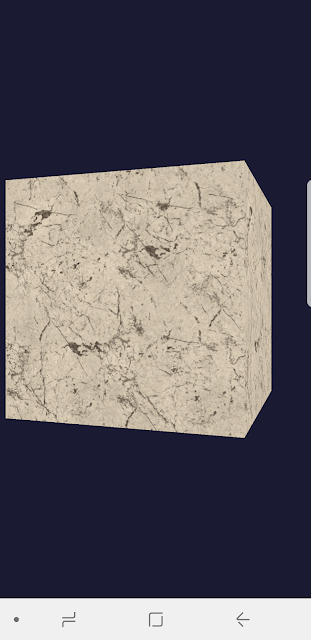
