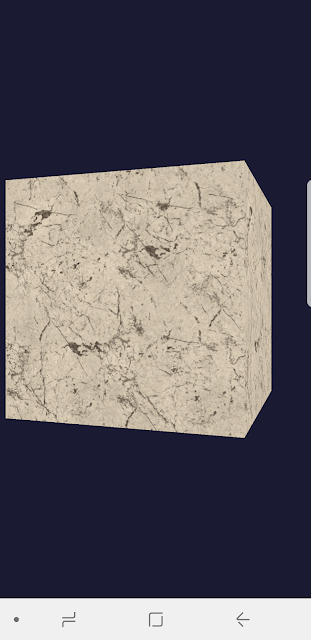

glTF loader for Android Vulkan

This post introduces how we integrated an existing glTF loader library into our Vulkan rendering framework, vulkan-android, so that it can display glTF models.

tinygltf

From the start, we did not want to reinvent the wheel, so we chose tinygltf as our glTF loader. tinygltf is a header-only C++11 library, which makes it easy to reuse across platforms, including our Android project.

tinygltf setup in Android Studio

In the vulkan-android project, we put third-party libraries into the third_party folder. Therefore, we include tinygltf from the third_party folder in app/CMakeLists.txt as below:

```cmake
set(THIRD_PARTY_DIR ../../third_party)
include_directories(${THIRD_PARTY_DIR}/tinygltf)
```

Model loading from tinygltf

We are going to load a glTF model in either ASCII or binary format from storage. tinygltf provides two APIs for this, LoadASCIIFromFile() and LoadBinaryFromFile(), and we pick one based on whether the file extension is *.gltf (ASCII) or *.glb (binary). The TinyGLTF loader will return a tiny...
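The extension-based choice between the two tinygltf loaders can be sketched as follows. This is a minimal sketch, not vulkan-android's actual code: `GltfFormat`, `LowerExtension()` and `DetectGltfFormat()` are hypothetical helper names, and treating the extension case-insensitively is our own assumption. The commented-out part at the bottom shows how the result could drive tinygltf's `LoadASCIIFromFile()` / `LoadBinaryFromFile()`. It assumes the model sits at an ordinary file path (for example, the app's internal storage) rather than inside the APK's assets.

```cpp
#include <algorithm>
#include <cctype>
#include <cstddef>
#include <string>

// Which tinygltf entry point a file should go through (hypothetical helper type).
enum class GltfFormat { Ascii, Binary, Unknown };

// Returns the lower-cased extension of `path` without the dot, or "" if
// there is none. Only the last path component is considered, so a dot in
// a directory name such as "models.v2/box" is ignored.
std::string LowerExtension(const std::string& path) {
    const std::size_t slash = path.find_last_of("/\\");
    const std::size_t dot = path.find_last_of('.');
    if (dot == std::string::npos ||
        (slash != std::string::npos && dot < slash)) {
        return "";
    }
    std::string ext = path.substr(dot + 1);
    std::transform(ext.begin(), ext.end(), ext.begin(), [](unsigned char c) {
        return static_cast<char>(std::tolower(c));
    });
    return ext;
}

// *.gltf -> LoadASCIIFromFile(), *.glb -> LoadBinaryFromFile().
GltfFormat DetectGltfFormat(const std::string& path) {
    const std::string ext = LowerExtension(path);
    if (ext == "gltf") return GltfFormat::Ascii;
    if (ext == "glb") return GltfFormat::Binary;
    return GltfFormat::Unknown;
}

// Possible use with tinygltf (header-only; the IMPLEMENTATION macros must be
// defined in exactly one .cpp file before including the header):
//
//   #define TINYGLTF_IMPLEMENTATION
//   #define STB_IMAGE_IMPLEMENTATION
//   #define STB_IMAGE_WRITE_IMPLEMENTATION
//   #include "tiny_gltf.h"
//
//   tinygltf::Model model;
//   tinygltf::TinyGLTF loader;
//   std::string err, warn;
//   bool ok = false;
//   switch (DetectGltfFormat(path)) {
//     case GltfFormat::Ascii:
//       ok = loader.LoadASCIIFromFile(&model, &err, &warn, path);
//       break;
//     case GltfFormat::Binary:
//       ok = loader.LoadBinaryFromFile(&model, &err, &warn, path);
//       break;
//     case GltfFormat::Unknown:
//       err = "unsupported extension: " + path;
//       break;
//   }
```

Keeping the extension check separate from the loader calls means the dispatch logic can be unit-tested without linking tinygltf, and an unknown extension produces an explicit error instead of silently going through the wrong parser.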